Introduction

Today, I would like to share how I ended up building a cluster for my Notebooks project, to make it even more useful for common recurring tasks and jobs.

My goal with this cluster is to enable seamless deployment of applications, dashboards, APIs, and cron jobs.

Background and Experience

To understand how I got here, it's essential to know a bit about my background. As a freelance developer, I've had the opportunity to work on various complex projects across different domains:

- Aerospace: Managed a massive Hadoop cluster, which taught me how to handle large-scale data processing efficiently.

- Kubernetes on AWS: Administered multitenant Kubernetes clusters with Istio using Infrastructure as Code (IaC), honing my skills in automation and large-scale infrastructure management.

- B2B E-commerce: Built a custom cluster using Helix and Zookeeper to manage stateful resources, tackling unique challenges in scalability and reliability.

These experiences have equipped me with a pragmatic and innovative approach to problem-solving.

Why I Built a Cluster

As I developed my Note app, I aimed not just to let users write notes and run code but also to empower them to deploy their creations online. This includes full-fledged applications, interactive dashboards, and sophisticated automations. My initial idea was straightforward: upload the binaries generated by the app to a remote server for execution and expose the results via an API. However, I soon encountered several complex questions:

- How to handle scaling across multiple servers?

- How to ensure optimal isolation of execution environments?

- How to configure different access security for each deployed application?

These challenges called for a far more robust, and admittedly more heavily engineered, approach than a simple server deployment.

The Ambition to Build a Super-Powerful Cluster

Instead of relying on an existing, expensive, generic solution such as a Kubernetes or Docker SaaS, I decided to build a custom solution tailored not only to my current needs but also to future demands. Here’s how I engineered this cluster.

Cluster Architecture

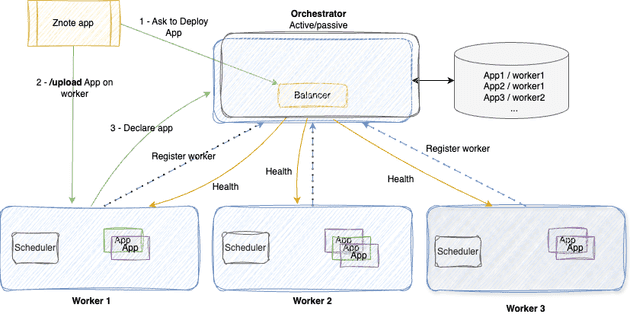

The architecture of my cluster is based on two key components:

- The Orchestrator: The brain of the cluster, it manages requests, directs traffic, and administers APIs.

- The Workers: The muscle of the cluster, they execute the uploaded applications and communicate with the orchestrator.

Figure 1: Simplified Architecture of the Cluster

The orchestrator operates in an active/passive mode to simplify management and ensure high availability. A load balancer distributes the applications to deploy across the workers based on node utilization and load. The workers self-register with the orchestrator, allowing for autonomous operation.
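
As a minimal sketch of the self-registration and load-based placement described above, the orchestrator could keep an in-memory registry of workers and pick the least-loaded live one. Everything here is an assumption for illustration, not the cluster's actual code: the `Worker` and `Registry` names, the heartbeat fields, and the 30-second timeout are hypothetical.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Worker:
    # Hypothetical record the orchestrator keeps for each worker.
    worker_id: str
    address: str
    cpu_load: float = 0.0   # fraction of CPU in use, 0.0 to 1.0
    running_apps: int = 0
    last_seen: float = field(default_factory=time.monotonic)


class Registry:
    """In-memory view of the workers known to the orchestrator."""

    def __init__(self, heartbeat_timeout=30.0):
        self.heartbeat_timeout = heartbeat_timeout  # assumed value
        self.workers = {}

    def register(self, worker_id, address):
        """Called by a worker when it starts (self-registration)."""
        self.workers[worker_id] = Worker(worker_id, address)

    def heartbeat(self, worker_id, cpu_load, running_apps):
        """Called periodically by a worker to report its current load."""
        worker = self.workers[worker_id]
        worker.cpu_load = cpu_load
        worker.running_apps = running_apps
        worker.last_seen = time.monotonic()

    def pick_worker(self, now=None):
        """Return the least-loaded worker that reported recently."""
        now = time.monotonic() if now is None else now
        alive = [
            w for w in self.workers.values()
            if now - w.last_seen <= self.heartbeat_timeout
        ]
        if not alive:
            raise RuntimeError("no live worker available")
        # Lowest CPU load wins; the number of running apps breaks ties.
        return min(alive, key=lambda w: (w.cpu_load, w.running_apps))
```

Workers that stop sending heartbeats simply age out of the candidate list, so no manual deregistration step is needed in this sketch.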

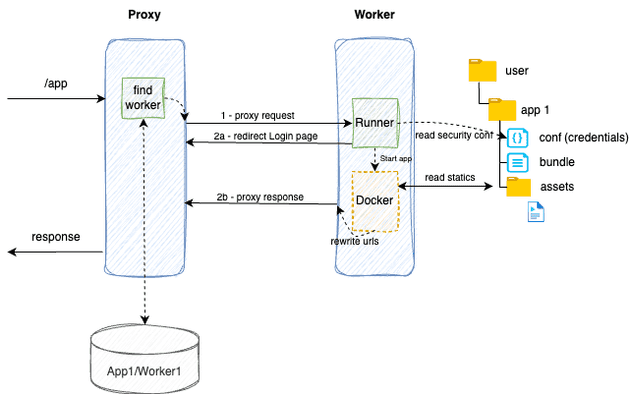

Proxy and Container Management

The key to the performance and efficiency of my cluster lies in how proxies and Docker containers are managed. Here are some strategies I implemented:

- Smart Proxy Redirections: By managing proxy redirections manually, I can apply custom security controls to each application and serve HTML resources efficiently using dynamic URL rewriting.

Figure 2: Proxy Redirection Management for the Cluster Architecture

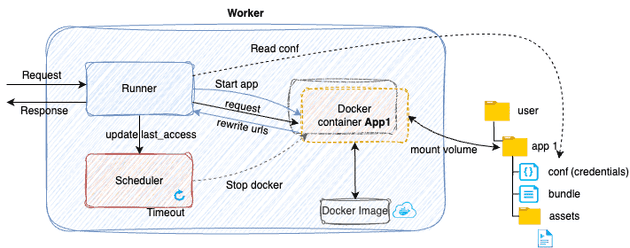

- Docker Container Isolation: Each backend application (Cron, API) is isolated in its own Docker container, using the same hardened read-only image to minimize storage space.

Figure 3: Docker Container Management for Application Execution

- Dynamic Resource Optimization: Unused containers are stopped to free up resources and restarted on demand, ensuring efficient resource utilization.
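
To make the first strategy above more concrete, here is a minimal sketch of a per-application access check and of dynamic URL rewriting for HTML served under a path prefix. It is an illustration under assumptions, not the proxy actually used in the cluster: `AppRoute`, the token-based check, and the `/apps/demo` prefix are all hypothetical.

```python
import re
from dataclasses import dataclass, field


@dataclass
class AppRoute:
    # Hypothetical per-application proxy settings.
    app_id: str
    public: bool = False
    allowed_tokens: set = field(default_factory=set)


def is_allowed(route, token):
    """Per-application security check: public apps pass, others need a known token."""
    return route.public or (token is not None and token in route.allowed_tokens)


# Matches href="/..." and src="/..." but not protocol-relative "//host/..." URLs.
_ROOT_URL = re.compile(r'\b(href|src)="/(?!/)')


def rewrite_html(html, prefix):
    """Prefix root-relative links so assets resolve under the app's public path."""
    return _ROOT_URL.sub(lambda m: f'{m.group(1)}="{prefix}/', html)
```

For example, `rewrite_html('<a href="/about">', "/apps/demo")` yields `<a href="/apps/demo/about">`, while absolute and protocol-relative URLs are left untouched. A regular expression is enough for a sketch; a real proxy would more likely use an HTML parser.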

Practical Example

When a request comes in, the worker determines whether it targets a static web application (HTML) or an application that requires a container (Node.js). If a Docker container is needed, the worker checks whether it is already running on the cluster or needs to be started. This approach ensures that resources are used only when necessary.
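
The request flow above can be sketched as follows. This is a simplified illustration under assumptions: the `ContainerPool` class, the `kind` field on the app description, and the 300-second idle timeout are hypothetical, and the `start` and `stop` callbacks stand in for whatever actually launches and stops Docker containers.

```python
import time


class ContainerPool:
    """Tracks which app containers are running and when they were last used."""

    def __init__(self, start, stop, idle_timeout=300.0, clock=time.monotonic):
        self._start = start            # callable(app_id): starts the container
        self._stop = stop              # callable(app_id): stops the container
        self._idle_timeout = idle_timeout
        self._clock = clock
        self._last_used = {}           # app_id -> time of the last request

    def ensure_running(self, app_id):
        """Start the container only if it is not already running."""
        if app_id not in self._last_used:
            self._start(app_id)
        self._last_used[app_id] = self._clock()

    def reap_idle(self):
        """Stop containers that have been unused for longer than the timeout."""
        now = self._clock()
        idle = [a for a, t in self._last_used.items()
                if now - t > self._idle_timeout]
        for app_id in idle:
            self._stop(app_id)
            del self._last_used[app_id]
        return idle


def handle_request(app, pool):
    """Decide how a worker serves a request for the given app."""
    if app["kind"] == "static":
        return "serve-static"          # HTML app: no container involved
    pool.ensure_running(app["id"])     # container app: start on demand
    return "forward-to-container"
```

Calling `reap_idle()` from a periodic task gives the stop-when-unused behavior, and the next request for a stopped app restarts it through `ensure_running`.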

Cutting Costs While Maintaining Maximum Power

I made several strategic choices to cut costs while maintaining the power and flexibility of the cluster:

- Manual Proxy Management: By handling redirections manually, I could include custom security controls for each deployed application.

- Read-Only Docker Usage: Using the same read-only Docker image for every application minimizes storage consumption and simplifies environment management. It also improves security, because I keep control of the packages installed in the image.

- Dynamic Container Management: By stopping unused containers, I could free up resources and reallocate them on demand, ensuring optimal resource utilization.
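
As an illustration of the read-only, shared-image approach, the sketch below builds a `docker run` command line for one application. The flags are standard Docker CLI options, but the specific choices are assumptions rather than the cluster's real configuration: the container naming scheme, the `/app` mount point, the resource limits, and the `notebook-runtime:latest` image name are all hypothetical.

```python
def docker_run_args(app_id, image, app_dir, memory="256m", cpus="0.5"):
    """Build the argument list for starting one isolated, read-only container."""
    return [
        "docker", "run", "--detach",
        "--name", f"app-{app_id}",              # hypothetical naming scheme
        "--read-only",                          # root filesystem is read-only
        "--tmpfs", "/tmp",                      # writable scratch space in memory
        "--cap-drop", "ALL",                    # drop all Linux capabilities
        "--security-opt", "no-new-privileges",  # block privilege escalation
        "--memory", memory,                     # assumed per-app memory limit
        "--cpus", cpus,                         # assumed per-app CPU limit
        "--volume", f"{app_dir}:/app:ro",       # app code mounted read-only
        image,                                  # same shared image for every app
    ]


args = docker_run_args("api-42", "notebook-runtime:latest", "/srv/apps/api-42")
print(" ".join(args))
```

The resulting list can be passed to `subprocess.run(args, check=True)`. Because every application uses the same image and only mounts its own code, the image is stored once per worker.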

Conclusion

In conclusion, building this cluster for my Notebook app gave me a complete solution for deploying applications, dashboards, and automations. The project not only helped me control costs, but also taught me how to manage the complexity of a multi-tenant cluster efficiently while delivering the most robust service I can to my users.

Thank you for following along on this technical journey! If you have any questions or comments, feel free to share them.